Case Study — Design

Render-Optimized Skeuomorphic UI

October - December 2022

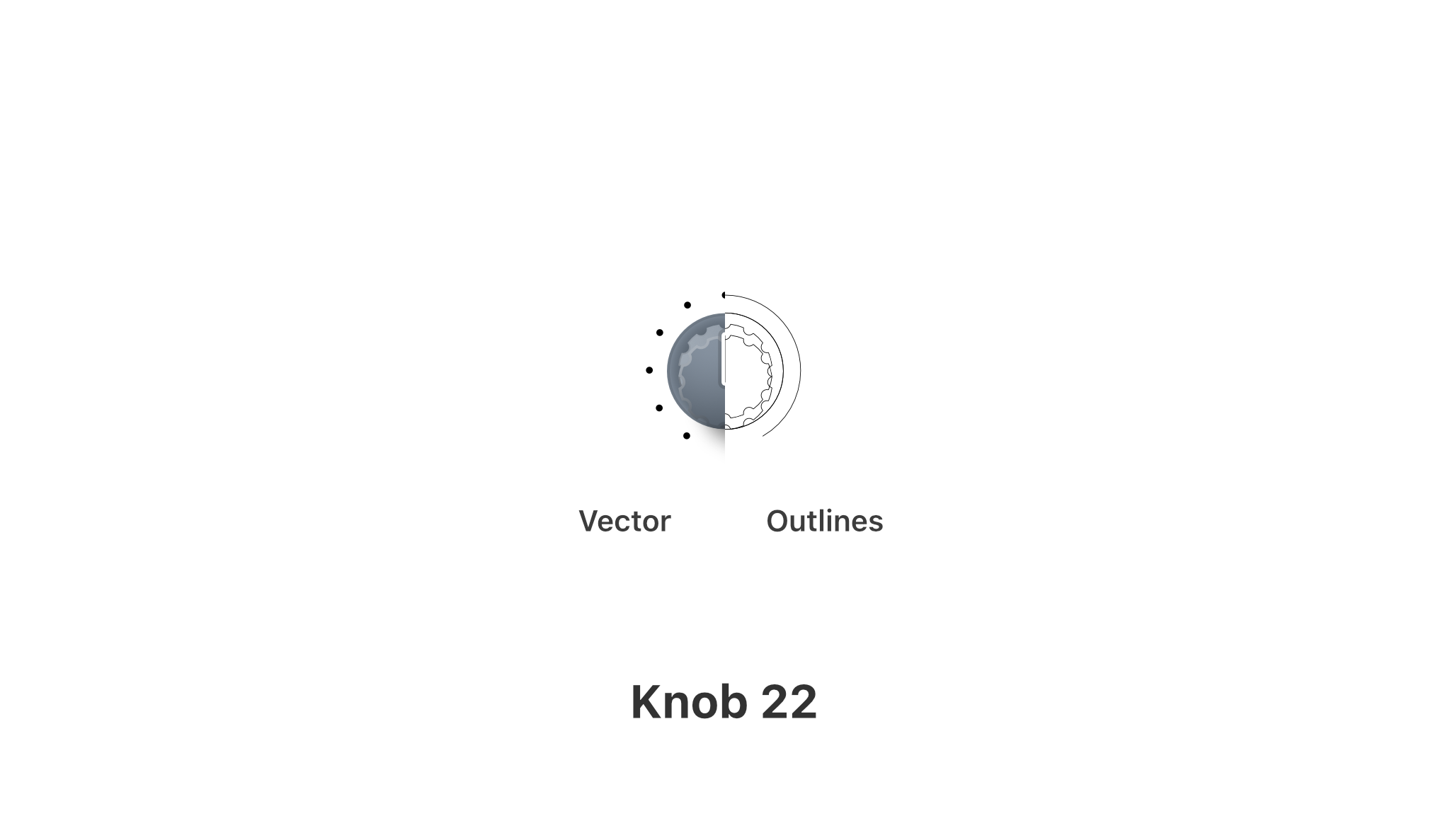

All of the interface assets I created in this project are purely vector-based, excluding some image-based textures.

🎚️ Assets produced:

282 Assets

- 30 Panels

- 38 Knobs

- 14 Sliders

- 26 Buttons

- 58 Accessories

- 11 Textures

- 5 Fonts

225 Symbols

Tools used:

Purpose

A music plugin is an interface users interact with to control parameters of an audio processing pipeline.

Important to note, the interface is just a skin. The audio processor is the main consumer of resources. This means interfaces need to be lightweight and mindful of performance overhead.

Audio processing happens in realtime on the CPU. Plugin UIs need to offload as much rendering as possible to the GPU. In practice, this means reducing the number of draw calls & vertex buffers processed by the CPU (typically with subranging & instancing).

For example, reallocating one (large) VBO (vertex buffer object) vs. updating separate VBOs for each plugin window instance can free up CPU. Rather than your CPU processing each instance (O(n)), it can process a single VBO for all instances and upload it to the GPU (O(1)). Optimizations like this are crucial when every individual frame has to finish within a tight time budget.
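A minimal sketch of the batching idea, not tied to any real graphics API. `pack_instances` and `UploadCounter` are hypothetical names used only for illustration; `upload` stands in for whatever buffer-upload call the renderer exposes, and all it does here is count calls.

```python
from array import array


def pack_instances(instances):
    """Pack per-instance vertex lists into one flat buffer.

    Returns the buffer plus (offset, count) subranges, one per instance,
    so each instance can still be addressed inside the shared buffer.
    """
    buffer = array("f")
    subranges = []
    for vertices in instances:
        subranges.append((len(buffer), len(vertices)))
        buffer.extend(vertices)
    return buffer, subranges


class UploadCounter:
    """Hypothetical stand-in for a GPU upload call; it only counts calls."""

    def __init__(self):
        self.calls = 0

    def upload(self, data):
        self.calls += 1


# Three plugin windows, each drawing a small quad (x, y pairs).
quad = [0.0, 0.0, 1.0, 0.0, 1.0, 1.0, 0.0, 1.0]
instances = [quad, quad, quad]

separate = UploadCounter()
for vertices in instances:          # one upload per instance
    separate.upload(array("f", vertices))

batched = UploadCounter()
buffer, subranges = pack_instances(instances)
batched.upload(buffer)              # one upload for all instances
```

Packing still touches every vertex once. What becomes constant is the number of upload calls, which is the part the O(1) above refers to.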

Each frame of processing (both audio & UI) needs to happen in as little time as possible. Otherwise, you risk glitching & artifacts in your audio output.

That’s what the buffer size on your audio interface is for: adjusting the number of audio samples processed per frame. A larger buffer size means more samples are processed per frame, but at the expense of latency (the audio playing back later than it appears on the screen).

A music plugin host dispatches each chunk of samples at a consistent interval (set by the buffer size). If a frame stalls or exceeds its buffer period, the following frame will not start in time, and the buffer underruns.
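To put numbers on this, here is a small sketch of the arithmetic. The 512-sample buffer and 48 kHz sample rate are assumed values picked for illustration, and the function names are hypothetical.

```python
def buffer_period_ms(buffer_size, sample_rate):
    """Time available to process one buffer, in milliseconds."""
    return buffer_size / sample_rate * 1000.0


def find_underruns(frame_times_ms, period_ms):
    """Return the indices of frames that took longer than one buffer period."""
    return [i for i, t in enumerate(frame_times_ms) if t > period_ms]


# Assumed settings: 512 samples at 48 kHz gives roughly 10.67 ms per frame.
period = buffer_period_ms(512, 48_000)

# Three frames taking 10 ms, 20 ms, and 10 ms: only the second overruns.
late = find_underruns([10.0, 20.0, 10.0], period)
```

Doubling the buffer size doubles the period, which is the latency trade-off described above.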

╭< Frame 1 > ╭< Frame 2 > ╭< Frame 3 >

├ ✓ 10ms ├ ⨯ 20ms ├ ✓ 10ms

│ Pass │ Underrun → │ Artifacts

Rendering Options

For rendering music tooling interfaces in software, you have a few options:

- Image textures (e.g. PNG)

- Vector-based renderer

- 3D-based renderer (can include shading pipelines)

Image textures are the most common, being the easiest and least resource intensive. You get detailed designs without the overhead.

They have their obvious limitations. Fixed sizing is a major issue for distribution across the wide array of desktop screen sizes & resolutions. (Looking at you, ultrawide screen users.)

To scale the window for an image texture-based UI, you need to include different sizes of all your images for each target window scale. Otherwise, elements of your UI will look pixelated/blurry when scaled up.

Another slowdown is the workflow for rendering animation sequences. You have to render each frame as an image, then assemble it as an animation strip. There are many tools that can help automate this process. I need to mention the web version of the classic KnobMan by g200kg that has been used to render knobs & sliders for countless music plugin UIs throughout the decades.
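For context, this is roughly how an animation strip is consumed at draw time. It is a sketch that assumes a vertical strip with equally sized frames stacked top to bottom; `strip_frame_rect` is a hypothetical helper, not part of KnobMan or any plugin framework.

```python
def strip_frame_rect(value, frame_count, frame_width, frame_height):
    """Map a normalized parameter value (0..1) to a frame in a vertical strip.

    Returns (x, y, width, height) of the source rectangle to copy.
    """
    value = min(max(value, 0.0), 1.0)             # clamp out-of-range input
    index = round(value * (frame_count - 1))      # nearest prerendered frame
    return (0, index * frame_height, frame_width, frame_height)


# A 101-frame strip of 64x64 px frames, knob at its midpoint.
rect = strip_frame_rect(0.5, 101, 64, 64)
```

The knob can only ever show one of the prerendered frames, so smoothness is capped by the frame count, and every target scale needs its own strip.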

Vector-based renderers solve the sizing issue, but can incur significant performance overhead with the number of moving/animated parts in a music plugin UI.

Lots of vector-based plugins simplify graphical complexity to make up for the increase in overhead, and craft unique stylized looks.

The cost of 3D-based renderers both to develop & create assets for, plus the overhead of rendering in realtime, means most music plugins don’t use them.

Instead, plugins with 3D-based elements just render static images and animate the image sequences. Some plugins blend rendered elements with vector-based interactive elements. This way, continuous animation is smooth without most of the overhead cost.

Project Goals

The goal of this project was to develop a set of vector-based techniques for rendering skeuomorphic music plugin UIs.

The idea is that the UI would be drawn:

- Once, when the plugin window is first opened/drawn

- When the user resizes the plugin window (continuous or throttled/debounced)

This ensures the UI can be truly responsive–and always drawn perfectly to scale–while reducing overhead.
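The debounced variant could look something like the sketch below. `ResizeDebouncer` is a hypothetical class; it takes timestamps as arguments instead of using a real timer, so the logic stays easy to follow and test.

```python
class ResizeDebouncer:
    """Trigger a full redraw only after resizing has paused for `delay` seconds."""

    def __init__(self, delay):
        self.delay = delay
        self.pending_size = None
        self.last_event = None

    def on_resize(self, size, now):
        # Remember only the newest size; earlier ones are superseded.
        self.pending_size = size
        self.last_event = now

    def poll(self, now):
        """Return the size to redraw at, or None if nothing is due yet."""
        if self.pending_size is None or now - self.last_event < self.delay:
            return None
        size, self.pending_size = self.pending_size, None
        return size


debouncer = ResizeDebouncer(delay=0.1)
debouncer.on_resize((800, 600), now=0.00)
debouncer.on_resize((900, 650), now=0.05)
early = debouncer.poll(now=0.10)   # only 0.05 s since the last resize event
due = debouncer.poll(now=0.20)     # resizing has paused long enough
```

Two resize events produce a single full redraw at the final size. Continuous redraw would instead draw on every event, which looks better mid-drag but costs more.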

Localized areas are then redrawn as needed for:

- Parameter changes (e.g. user adjusts a knob)

- State changes (e.g. user hovers over a button)

- Animation (e.g. spectrum analyzer graph)

Animation for controls like knobs & sliders can be fully continuous and still stay lightweight.
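One common way to track localized redraws is a dirty region. The sketch below keeps a single bounding rectangle, which is the simplest version; keeping a list of separate rectangles is another option. `DirtyRegion` and `union_rect` are hypothetical names.

```python
def union_rect(a, b):
    """Smallest rectangle (x, y, w, h) that covers both a and b."""
    x0 = min(a[0], b[0])
    y0 = min(a[1], b[1])
    x1 = max(a[0] + a[2], b[0] + b[2])
    y1 = max(a[1] + a[3], b[1] + b[3])
    return (x0, y0, x1 - x0, y1 - y0)


class DirtyRegion:
    """Collects the bounds of controls that changed since the last frame."""

    def __init__(self):
        self.rect = None

    def mark(self, rect):
        self.rect = rect if self.rect is None else union_rect(self.rect, rect)

    def take(self):
        """Return the area to redraw this frame (or None) and reset."""
        rect, self.rect = self.rect, None
        return rect


region = DirtyRegion()
region.mark((10, 10, 40, 40))   # user adjusts a knob
region.mark((60, 20, 20, 10))   # user hovers over a button
to_redraw = region.take()
```

Only `to_redraw` is drawn that frame. If nothing changed, `take()` returns `None` and the frame can be skipped entirely.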

This eliminates the need for prerendered animation assets, constructing animation strips, and prerendering multiple sizes of the same asset for each target window scale.

Flat-shading is the final component to reducing draw calls & vertex buffers processed by the CPU. No lighting (faster frag shaders), no normals (even faster), no specular; zero physically-based rendering. Just flat colors & gradients. Even shadows are flat gradients.
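With no lighting model, a shaded pixel comes down to a linear blend between two colors. The sketch below shows that blend on the CPU purely for illustration; in practice the interpolation would run on the GPU. The function names and the RGBA shadow values are assumptions.

```python
def lerp_color(start, end, t):
    """Linearly blend two color tuples; t=0 gives start, t=1 gives end."""
    t = min(max(t, 0.0), 1.0)
    return tuple(s + (e - s) * t for s, e in zip(start, end))


def linear_gradient(start, end, steps):
    """Sample a gradient at evenly spaced positions, ends included (steps >= 2)."""
    return [lerp_color(start, end, i / (steps - 1)) for i in range(steps)]


# A flat "shadow": black fading from 50% alpha to fully transparent (RGBA).
shadow = linear_gradient((0, 0, 0, 0.5), (0, 0, 0, 0.0), 3)
```

There are no normals, light vectors, or specular terms anywhere in the calculation, only a position along the gradient.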

Process

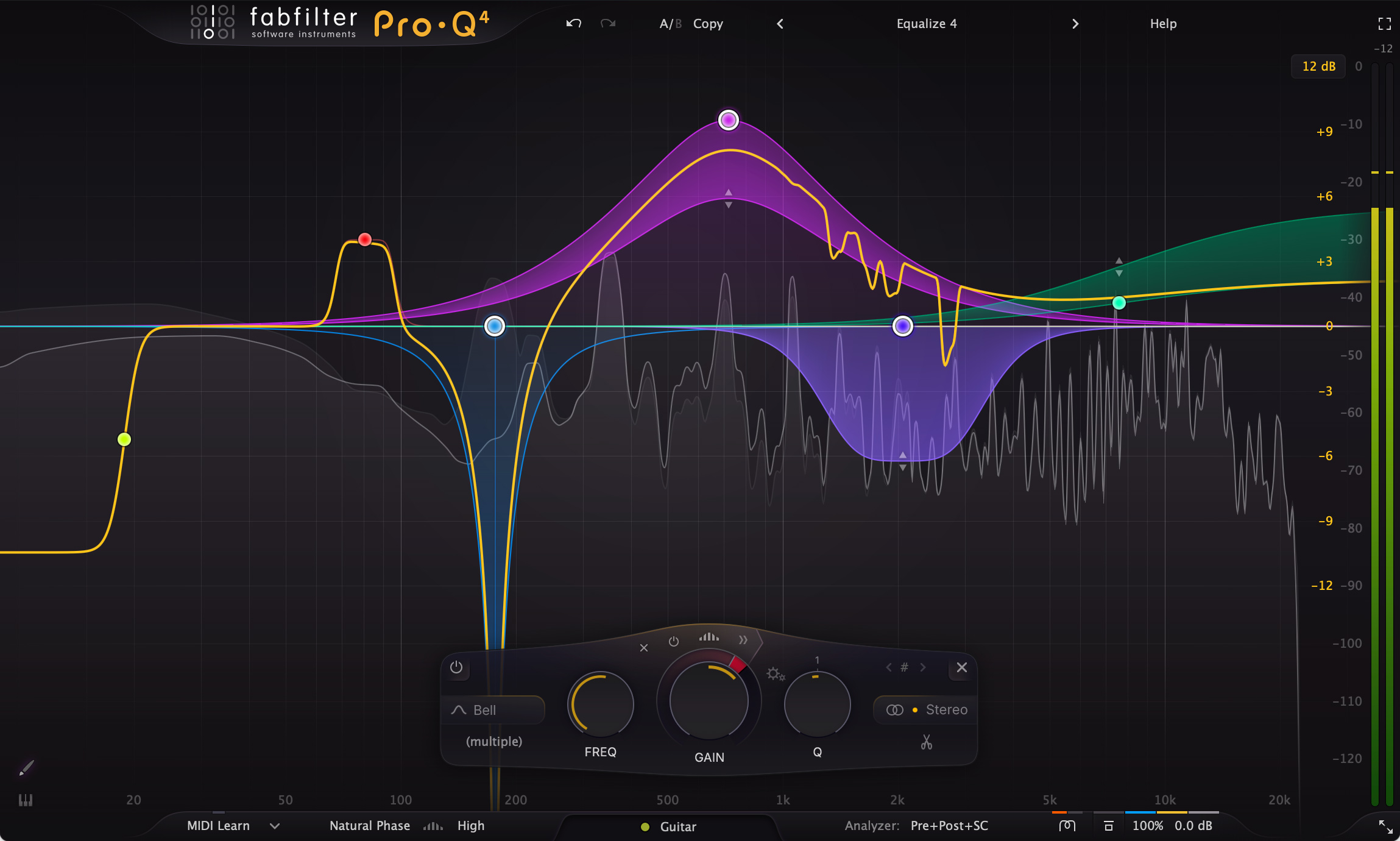

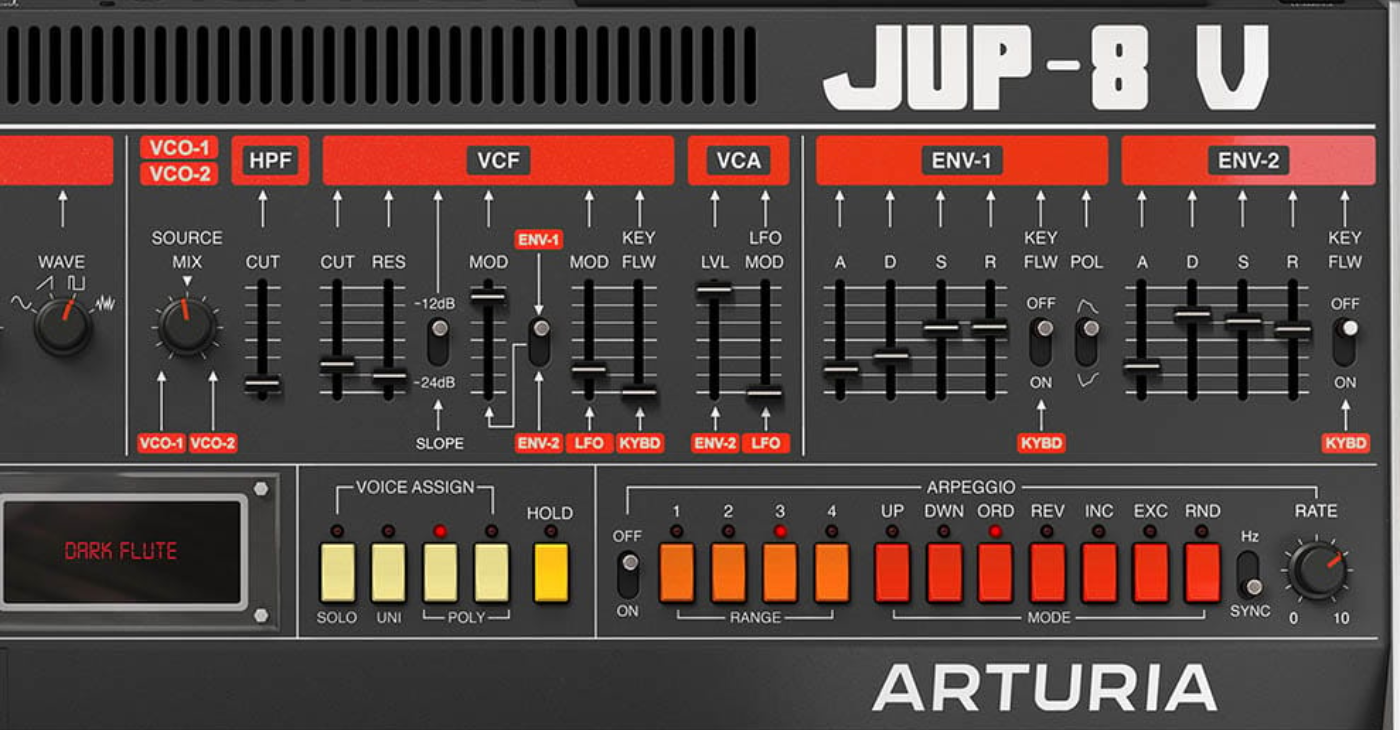

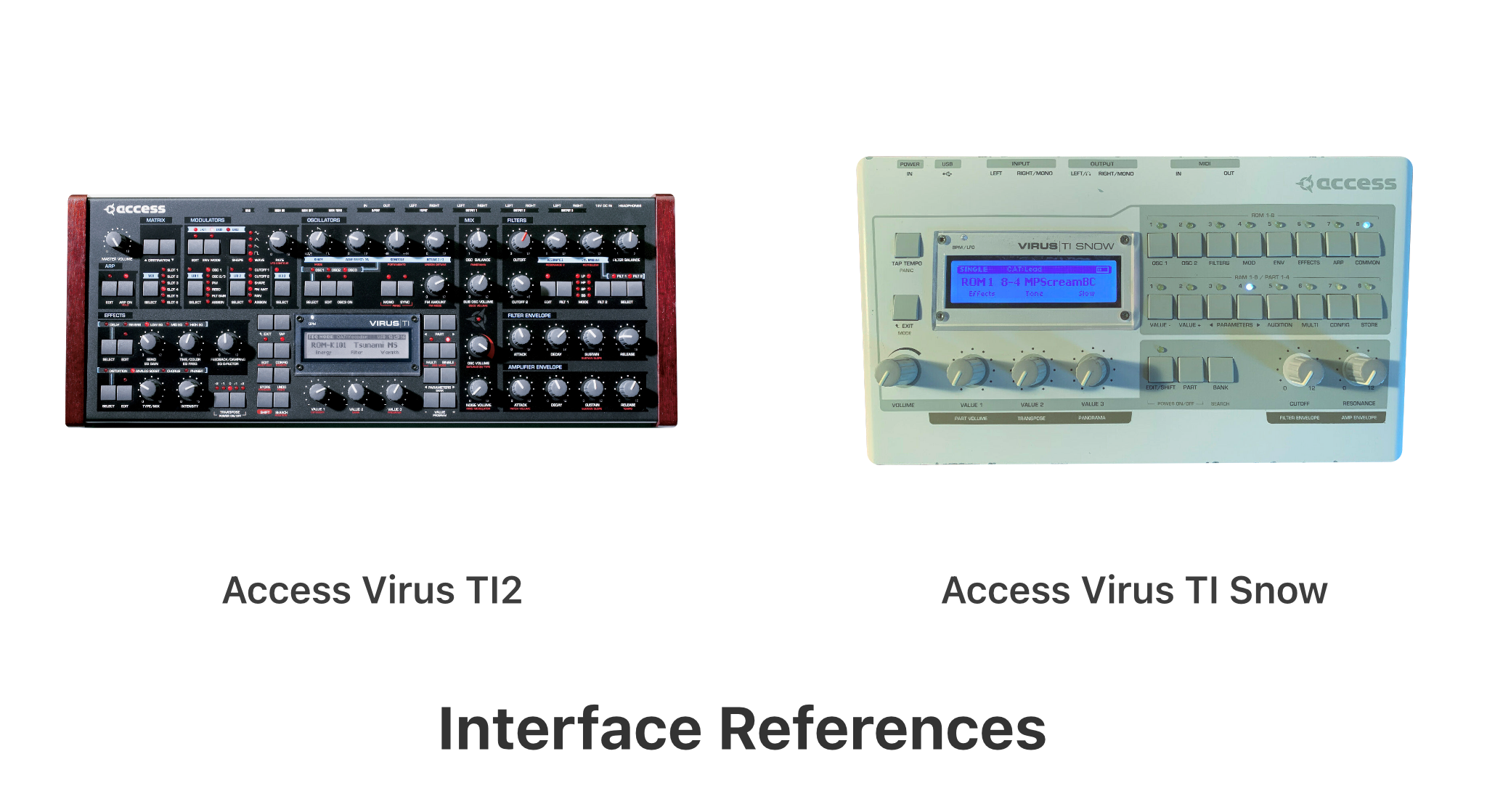

I collected a set of 57 music interface references, ranging from vintage hardware to modern software.

I analyzed common controls, the styles used to convey functionality, and user experience patterns between interfaces. ’Lotta knobs.

Each control follows a predictable layer architecture. For each control type (knobs, sliders, & buttons), I constructed a reusable architecture for building new controls.

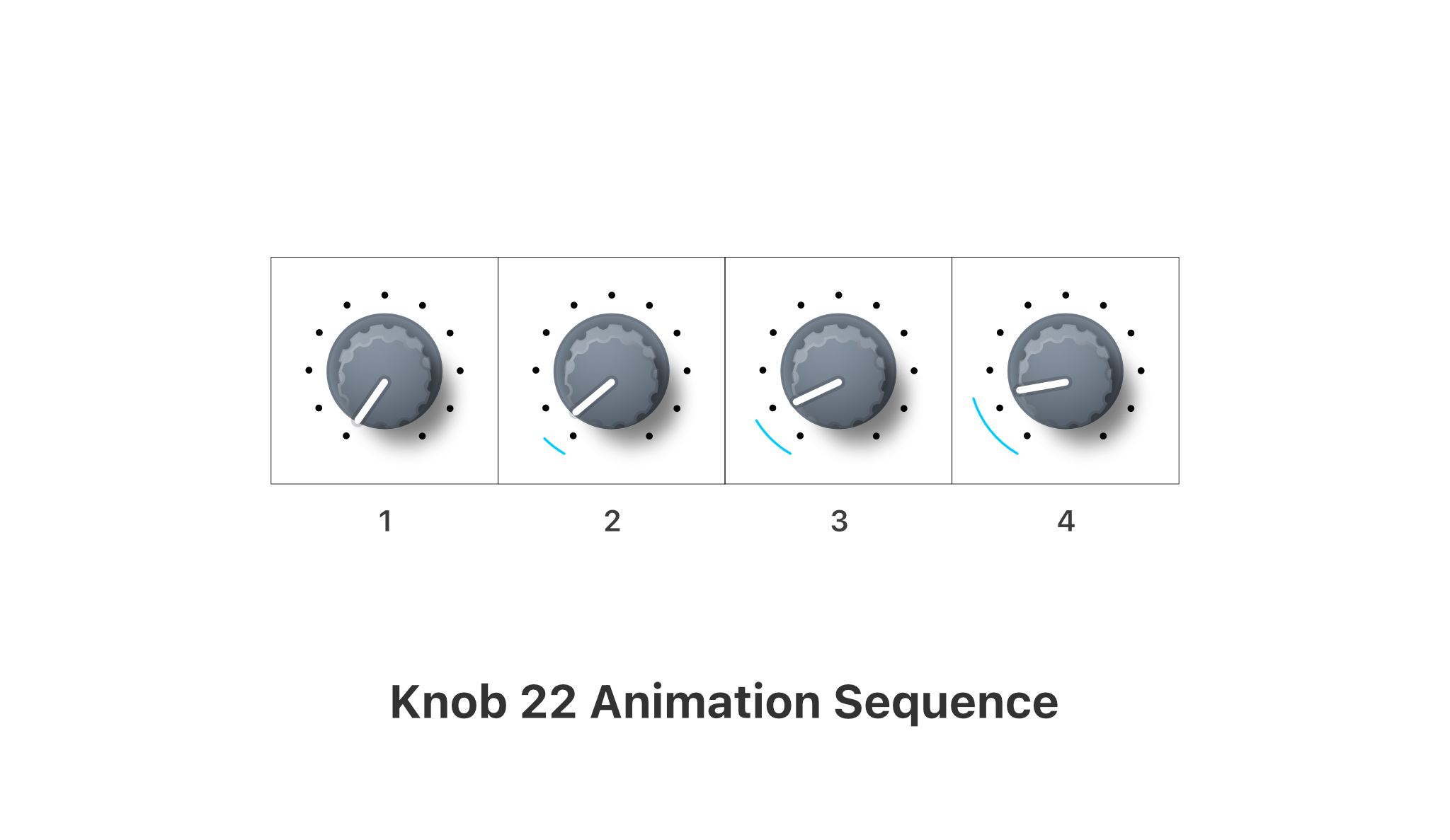

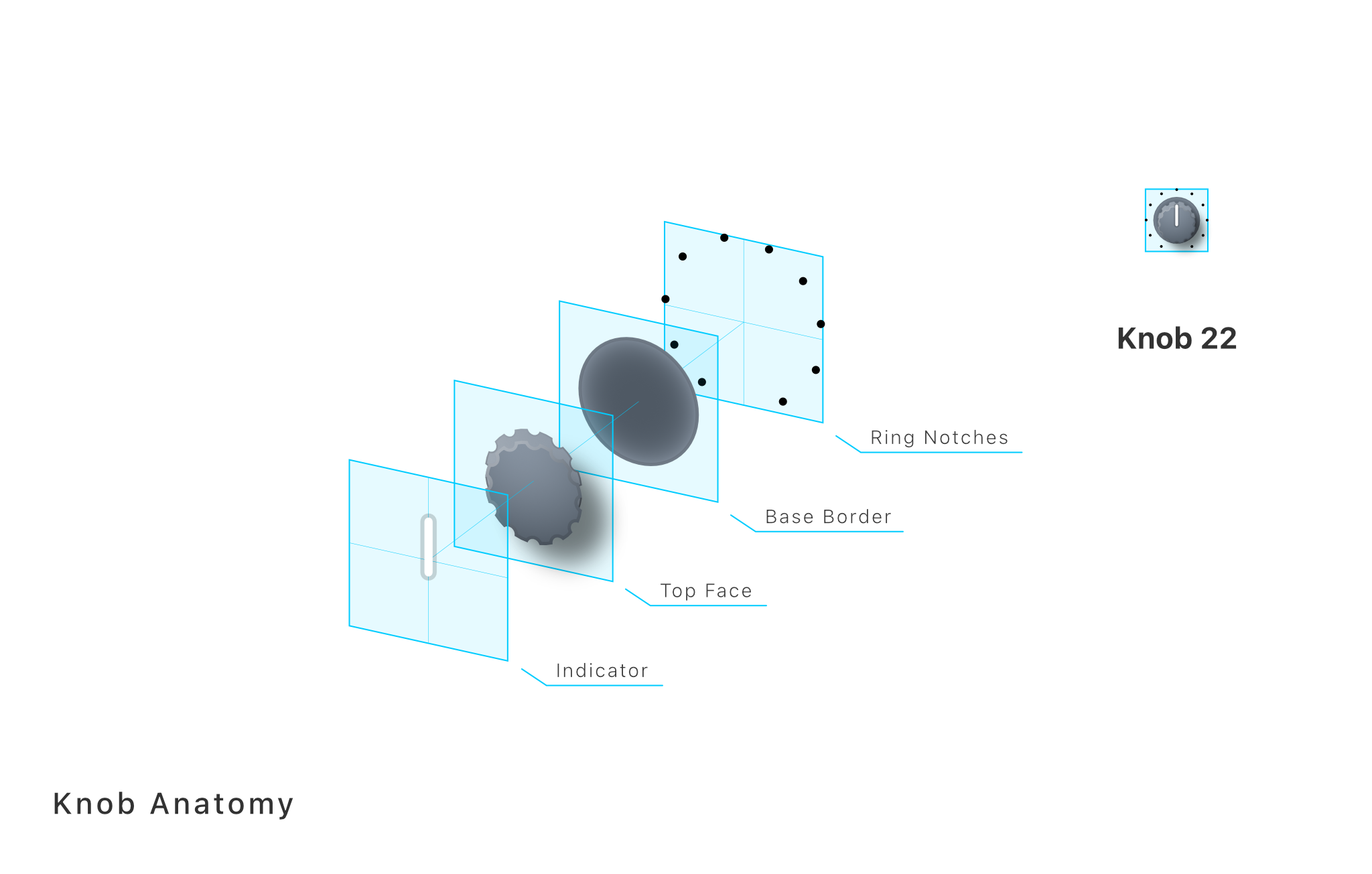

Below is an example of Knob 22 and its structure.

The two bottom layers, Ring Notches & Base Border, are static.

The two top layers, Top Face & Indicator, can be animated depending on the design of the control.

Certain designs may only need to animate the Indicator CPU-side, animating gradients GPU-side.
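The layer split can be expressed as plain data. This is a sketch only: the layer names come from the Knob 22 example, while the `Layer` type, the `animated` flags, and the stacking order within each pair are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    animated: bool   # True if the layer may change when the parameter moves


# Bottom pair first, then top pair; order within each pair is assumed.
KNOB_22 = [
    Layer("Ring Notches", animated=False),
    Layer("Base Border", animated=False),
    Layer("Top Face", animated=True),
    Layer("Indicator", animated=True),
]


def layers_to_redraw(layers, full_redraw):
    """Every layer on open/resize; only animated layers on a parameter change."""
    return [layer.name for layer in layers if full_redraw or layer.animated]


on_open = layers_to_redraw(KNOB_22, full_redraw=True)
on_turn = layers_to_redraw(KNOB_22, full_redraw=False)
```

A design that only animates the Indicator would flip `Top Face` to `animated=False`, shrinking the per-frame work further.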

A key aspect of these techniques is minimizing the amount of vector data processed by the CPU.

The fewer animated vertices, the less CPU processing. The fewer vertices altogether, the more window instances can be drawn together.

Vertex counts can rise slightly, as long as the high-vertex element either remains static (only drawn on window context change) or can be offloaded & animated GPU-side.

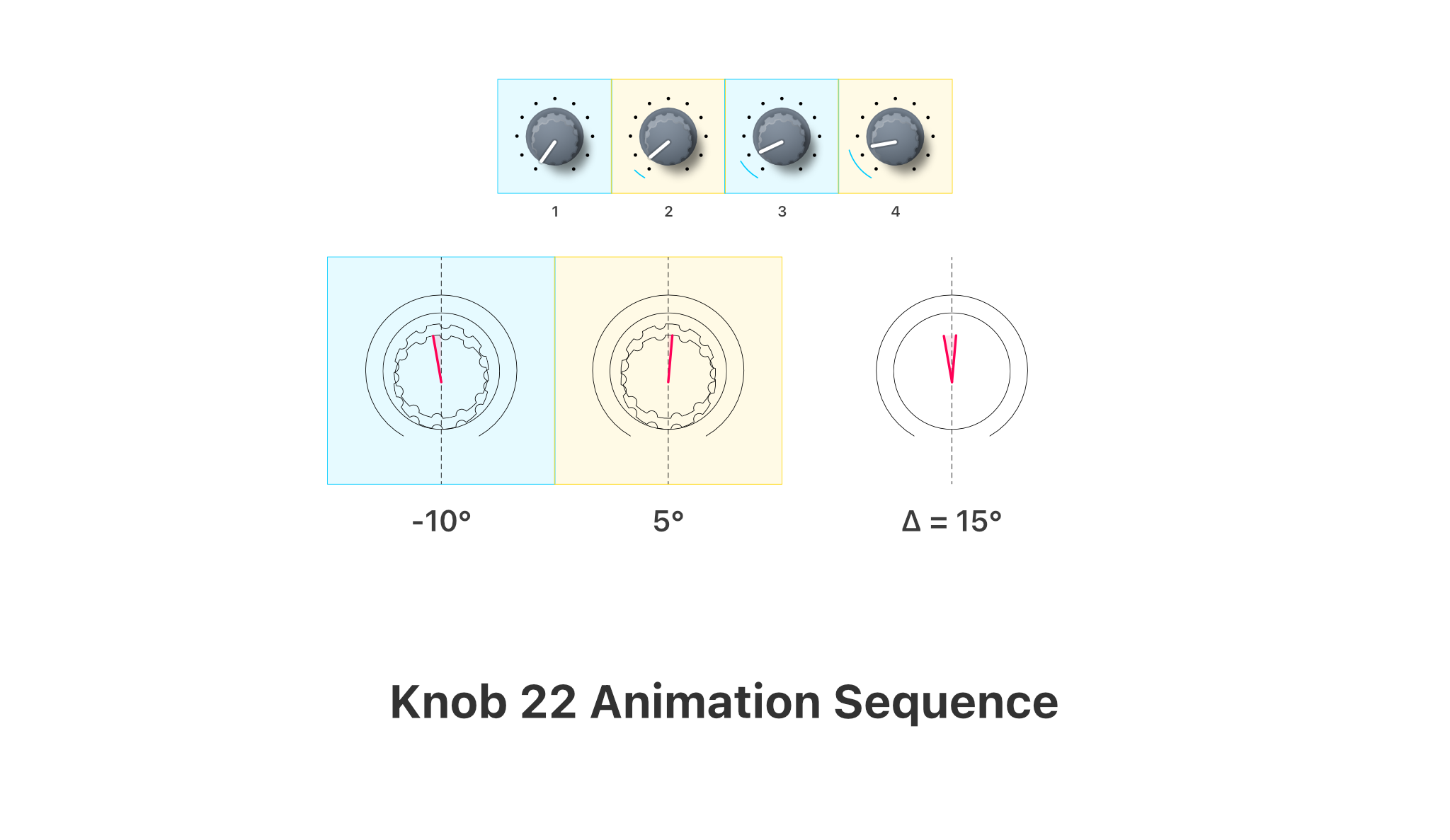

Remember our knob animation from earlier in the article. Rather than computing rotation values for every frame of a continuous animation, a lookup table (LUT) can store the values used in the animation.

Controls with discrete/stepped values can take this a step further and reduce the number of intervals stored in the LUT.

In fact, you can just precompute curve values into a LUT and reuse them when drawing each unchanged frame of animation.
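A minimal sketch of a rotation LUT. The 270° sweep (from -135° to 135°) and the step counts are assumed values, and both function names are hypothetical.

```python
import math


def build_rotation_lut(steps, start_deg=-135.0, end_deg=135.0):
    """Precompute (cos, sin) pairs for `steps` evenly spaced knob angles."""
    lut = []
    for i in range(steps):
        angle = math.radians(start_deg + (end_deg - start_deg) * i / (steps - 1))
        lut.append((math.cos(angle), math.sin(angle)))
    return lut


def lookup_rotation(lut, value):
    """Map a normalized value (0..1) to the nearest precomputed rotation."""
    value = min(max(value, 0.0), 1.0)
    return lut[round(value * (len(lut) - 1))]


continuous = build_rotation_lut(128)   # fine-grained knob
stepped = build_rotation_lut(5)        # 5-position switch needs only 5 entries

cos_mid, sin_mid = lookup_rotation(stepped, 0.5)   # middle position, 0 degrees
```

The trigonometry runs once when the table is built. Each frame after that is an index calculation and a list read.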

Design choices can close the loop and further reduce processing involved. You don’t need your CPU to calculate vertices of a perfect circle when your GPU can do it. A perfect circle doesn’t need a LUT for rotation values.
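One way a GPU can draw a circle without CPU-generated vertices is a per-pixel distance test in a fragment shader. That approach is an assumption here, not necessarily what this project used. The Python below mirrors the per-pixel math for illustration; `circle_coverage` and `feather` are hypothetical names.

```python
import math


def circle_coverage(px, py, cx, cy, radius, feather=1.0):
    """Per-pixel coverage of a circle: 1 inside, 0 outside, soft at the edge.

    Mirrors what a fragment shader could evaluate for each pixel, so no
    circle vertices are generated on the CPU.
    """
    distance = math.hypot(px - cx, py - cy) - radius   # signed distance to edge
    return min(max(0.5 - distance / feather, 0.0), 1.0)


inside = circle_coverage(0, 0, 0, 0, 10)     # center of the circle
on_edge = circle_coverage(10, 0, 0, 0, 10)   # exactly on the outline
outside = circle_coverage(20, 0, 0, 0, 10)   # well outside
```

The result depends only on distance from the center, so rotating the circle changes nothing. That is why a perfectly round face needs no rotation LUT.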

The combination of these techniques can reduce CPU load for skeuomorphic-style interfaces, while still allowing for high-quality, responsive, & scalable interfaces.

Fantasy Plugins

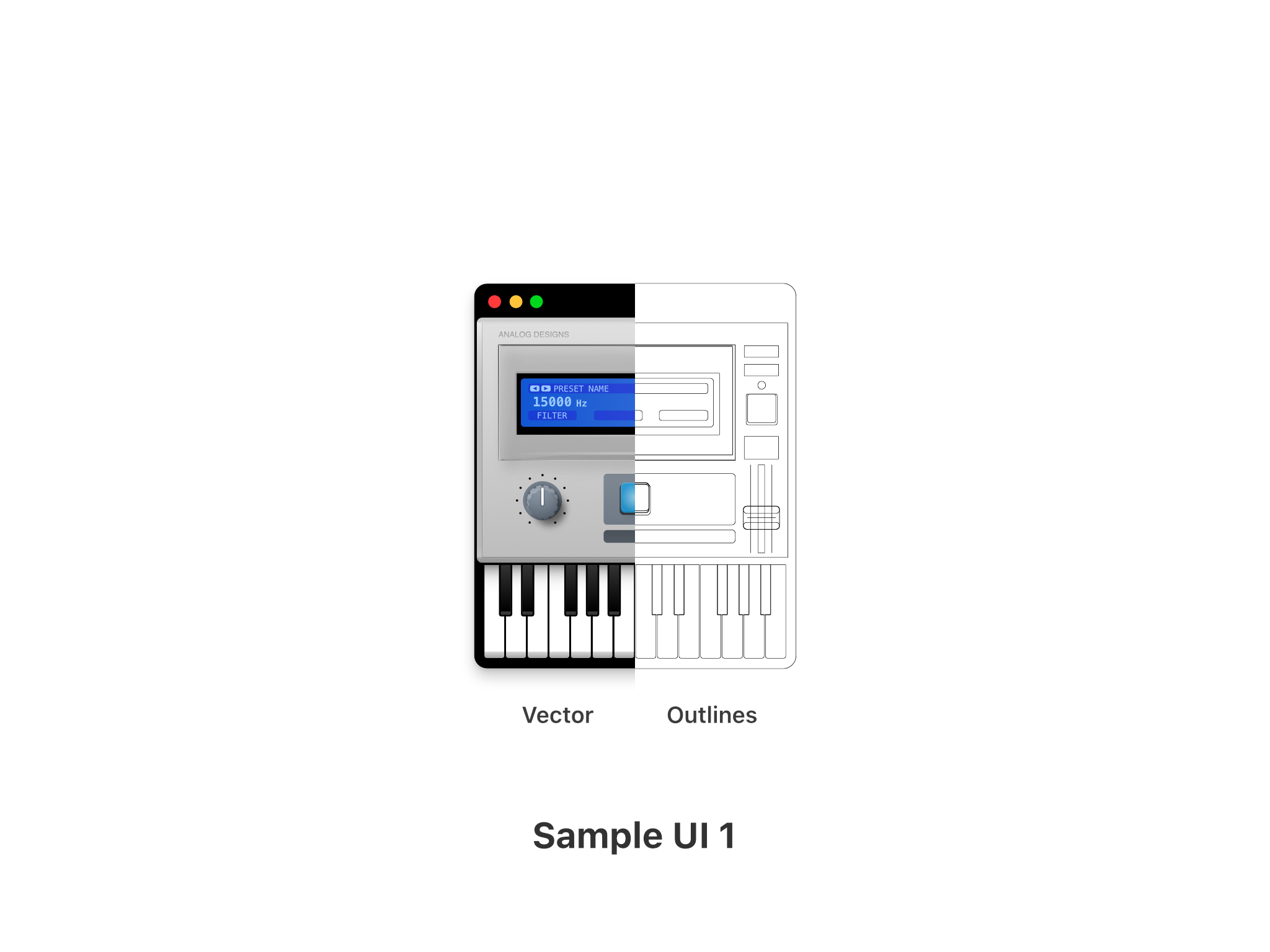

Below are a handful of fantasy music plugin UIs I threw together in a few hours.

None of the interfaces below were originally designed as a cohesive unit; they were all assembled using separate assets from this set.

All of the interfaces below are fully vector-based.

The legality surrounding the exclusion of woodgrain textures from a set of music plugin UIs is a bit of a gray area. I’ve included a sample with woodgrain below to ensure compliance.

† Sample UI 7 contains an image texture for the woodgrain side panels.

Symbols

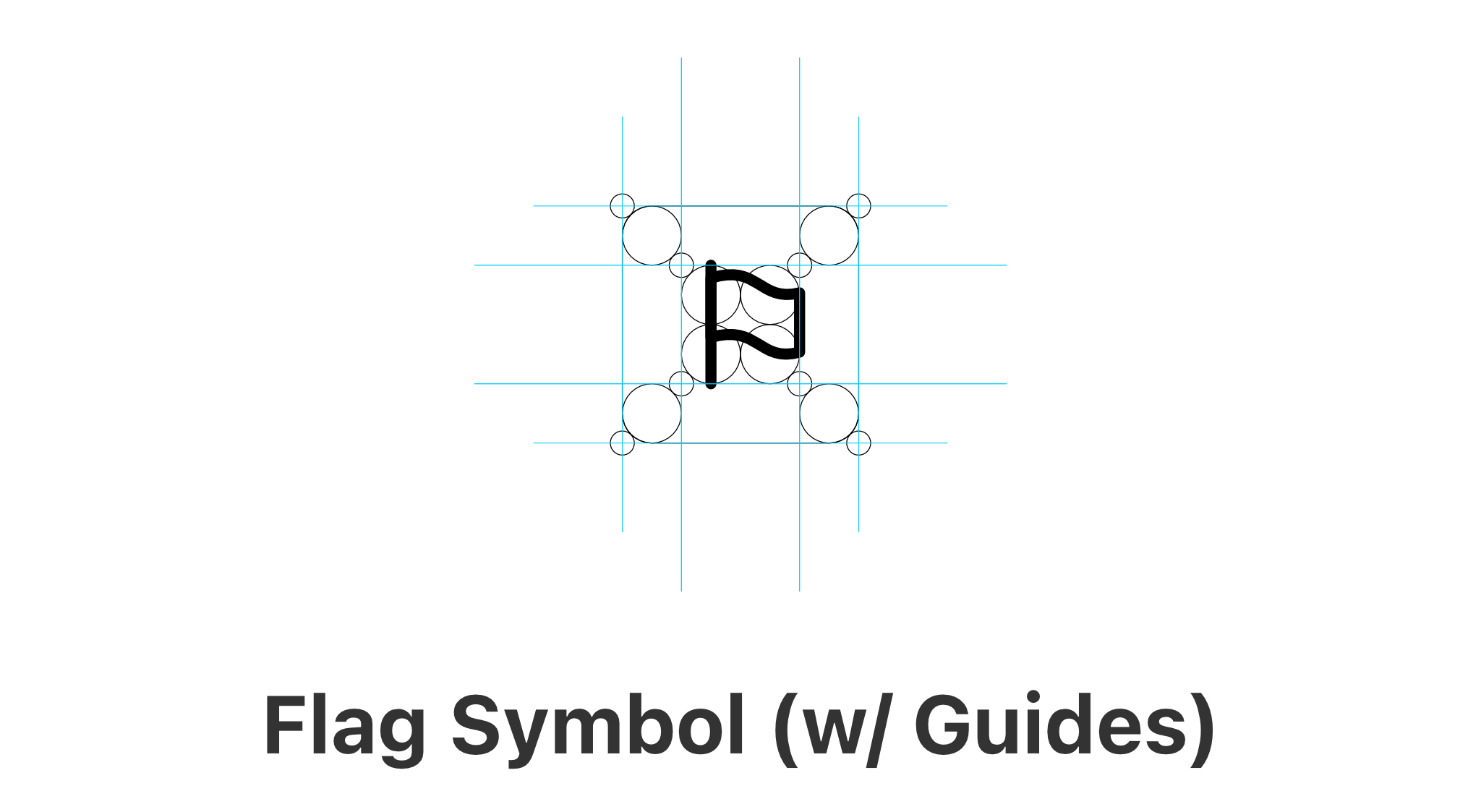

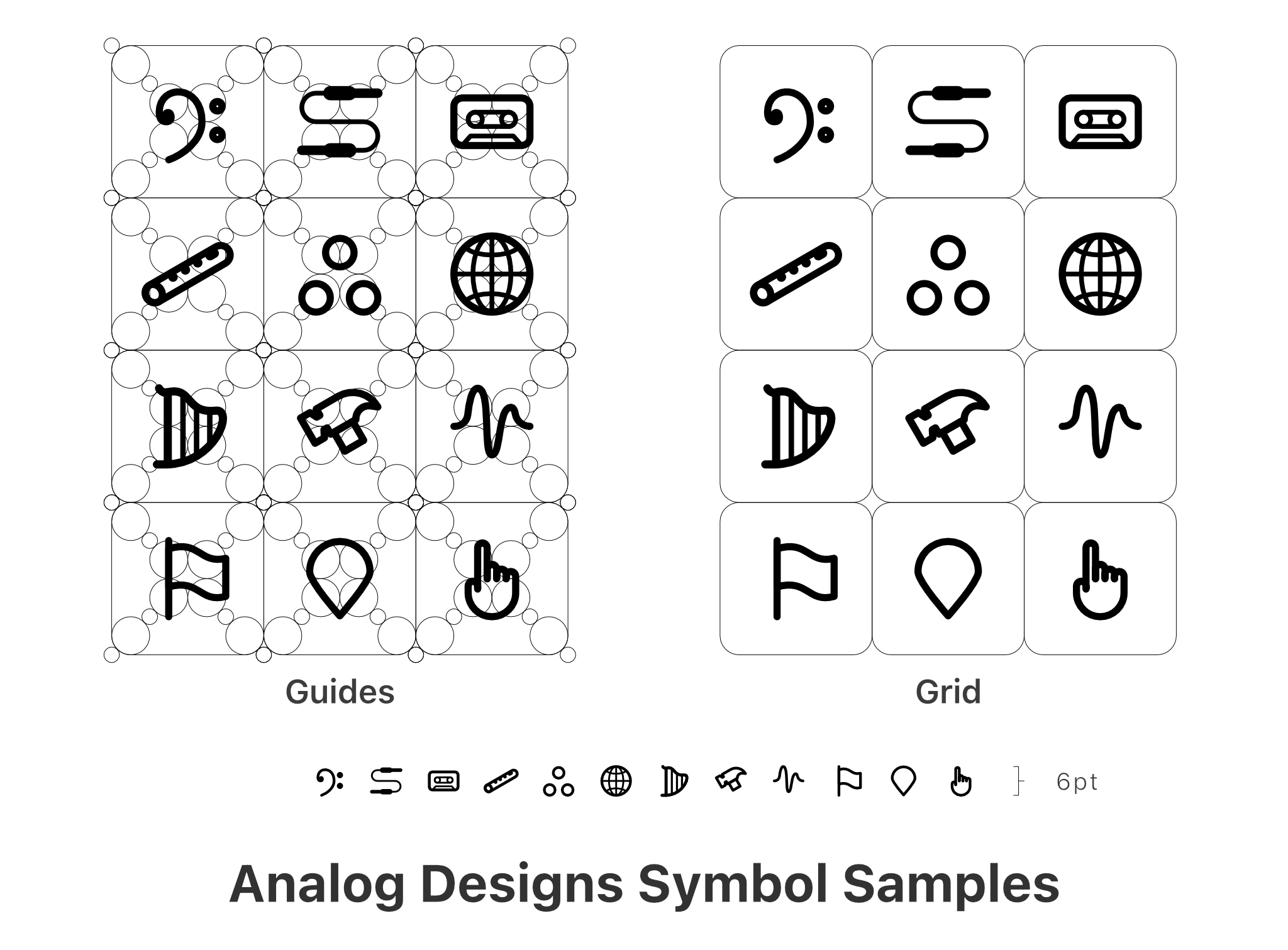

Included with the set are 225 line icon symbols.

I wanted to experiment with an idea I had for constructing the grid & guide system.

I used concepts from shape building to anticipate curves & placements into a set of guides.

My conclusions about the guides were mixed.

The guides did help speed up creation by helping me quickly place curves. But I also found them a bit restrictive for some compositions. I’ll likely stick with something more traditional for most projects.

alfred.r.duarte@gmail.com